Maximum subarray problem: Difference between revisions

Revision as of 19:05, 11 October 2019


In computer science, the maximum subarray problem is the task of finding a contiguous subarray with the largest sum, within a given one-dimensional array $A[1...n]$ of numbers. Formally, the task is to find indices $i$ and $j$ with $1 \leq i \leq j \leq n$, such that the sum

$$\sum_{x=i}^{j} A[x]$$

is as large as possible. (Some formulations of the problem also allow the empty subarray to be considered; by convention, the sum of all values of the empty subarray is zero.) Each number in the input array A could be positive, negative, or zero.[1]: 69

For example, for the array of values [−2, 1, −3, 4, −1, 2, 1, −5, 4], the contiguous subarray with the largest sum is [4, −1, 2, 1], with sum 6.
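The definition can be checked directly on this example. The following is a minimal sketch that tries every pair of indices while keeping a running sum; the function name `max_subarray_brute_force` is an illustrative assumption, and the sketch covers only the variant that disallows the empty subarray.

```python
def max_subarray_brute_force(numbers):
    """Return the largest sum over all non-empty contiguous subarrays."""
    if not numbers:
        raise ValueError("numbers must be non-empty")
    best_sum = float('-inf')
    for i in range(len(numbers)):
        running = 0
        for j in range(i, len(numbers)):
            running += numbers[j]  # running == numbers[i] + ... + numbers[j]
            best_sum = max(best_sum, running)
    return best_sum


example = [-2, 1, -3, 4, -1, 2, 1, -5, 4]
print(max_subarray_brute_force(example))  # 6
```

Because the inner loop extends the previous sum instead of recomputing it, this check takes quadratic rather than cubic time.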

Some properties of this problem are:

- If the array contains all non-negative numbers, then the problem is trivial; the maximum subarray is the entire array.

- If the array contains all non-positive numbers, then the solution is the number in the array with the smallest absolute value (or the empty subarray, if it is permitted).

- Several different sub-arrays may have the same maximum sum.

This problem can be solved using several different algorithmic techniques, including brute force,[1]: 70 divide and conquer,[1]: 73 dynamic programming,[1]: 74 and reduction to shortest paths.[citation needed]
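As an illustration of the divide-and-conquer technique, the sketch below splits the array in half, solves each half recursively, and combines the results with the best subarray crossing the midpoint. It is one possible formulation under stated assumptions (non-empty input, empty subarray disallowed), not necessarily the one given in the cited source; the name `max_subarray_dc` is hypothetical.

```python
def max_subarray_dc(numbers, lo=0, hi=None):
    """Largest sum of a non-empty contiguous subarray of numbers[lo:hi]."""
    if hi is None:
        hi = len(numbers)
    if hi - lo < 1:
        raise ValueError("range must be non-empty")
    if hi - lo == 1:
        return numbers[lo]
    mid = (lo + hi) // 2

    # Best sum of a run that ends exactly at index mid - 1 (left half).
    best_left = running = numbers[mid - 1]
    for k in range(mid - 2, lo - 1, -1):
        running += numbers[k]
        best_left = max(best_left, running)

    # Best sum of a run that starts exactly at index mid (right half).
    best_right = running = numbers[mid]
    for k in range(mid + 1, hi):
        running += numbers[k]
        best_right = max(best_right, running)

    crossing = best_left + best_right
    return max(max_subarray_dc(numbers, lo, mid),
               max_subarray_dc(numbers, mid, hi),
               crossing)


print(max_subarray_dc([-2, 1, -3, 4, -1, 2, 1, -5, 4]))  # 6
```

Each level of the recursion does linear work and there are logarithmically many levels, which gives an O(n log n) running time for this sketch.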

History

The maximum subarray problem was proposed by Ulf Grenander in 1977 as a simplified model for maximum likelihood estimation of patterns in digitized images.[2] Grenander was looking to find a rectangular subarray with maximum sum, in a two-dimensional array of real numbers. A brute-force algorithm for the two-dimensional problem runs in $O(n^6)$ time; because this was prohibitively slow, Grenander proposed the one-dimensional problem to gain insight into its structure. Grenander derived an algorithm that solves the one-dimensional problem in $O(n^2)$ time,[3] improving on the brute-force running time of $O(n^3)$. When Michael Shamos heard about the problem, he devised an $O(n \log n)$ divide-and-conquer algorithm for it overnight. Soon after, Shamos described the one-dimensional problem and its history at a Carnegie Mellon University seminar attended by Jay Kadane, who designed within a minute an $O(n)$-time algorithm,[2][1]: 76–77 [4]: 211 which is as fast as possible, since any algorithm must examine every element of the array. In 1982, David Gries obtained the same $O(n)$-time algorithm by applying Dijkstra's "standard strategy";[4] in 1989, Richard Bird derived it by purely algebraic manipulation of the brute-force algorithm using the Bird–Meertens formalism.[5]
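The quadratic-time approach based on cumulative sums can be sketched as follows. This is an illustrative reading of the idea rather than Grenander's original formulation: the table `S` and the function name `max_subarray_prefix_sums` are assumptions, and the sketch handles only non-empty input with the empty subarray disallowed.

```python
from itertools import accumulate


def max_subarray_prefix_sums(numbers):
    """Largest non-empty subarray sum, using a table of cumulative sums."""
    if not numbers:
        raise ValueError("numbers must be non-empty")
    n = len(numbers)
    # S[k] is the sum of the first k elements, so S[0] == 0.
    S = [0] + list(accumulate(numbers))
    best_sum = float('-inf')
    for i in range(1, n + 1):
        for j in range(i, n + 1):
            # Sum of elements i..j (1-based) in constant time.
            best_sum = max(best_sum, S[j] - S[i - 1])
    return best_sum


print(max_subarray_prefix_sums([-2, 1, -3, 4, -1, 2, 1, -5, 4]))  # 6
```

Building the table takes linear time, and each of the quadratically many index pairs is then evaluated with a single subtraction.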

Grenander's two-dimensional generalization can be solved in $O(n^3)$ time either by using Kadane's algorithm as a subroutine, or through a divide-and-conquer approach. Slightly faster algorithms based on distance matrix multiplication have been proposed by Hisao Tamaki and Takeshi Tokuyama in 1998[6] and by Takaoka (2002). There is some evidence that no significantly faster algorithm exists; an algorithm that solves the two-dimensional maximum subarray problem in $O(n^{3-\varepsilon})$ time, for any $\varepsilon > 0$, would imply a similarly fast algorithm for the all-pairs shortest paths problem.[7]
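One common way to use the one-dimensional algorithm as a subroutine is to fix a pair of rows, collapse the rows between them into a single array of column sums, and run the linear-time scan on that array. The sketch below assumes a non-empty rectangular list of lists and disallows the empty rectangle; the names `kadane` and `max_subrectangle_sum` are hypothetical.

```python
def kadane(values):
    """Largest sum of a non-empty contiguous run of values."""
    best = current = values[0]
    for x in values[1:]:
        current = max(x, current + x)
        best = max(best, current)
    return best


def max_subrectangle_sum(matrix):
    """Largest sum of a non-empty rectangular block of matrix."""
    rows, cols = len(matrix), len(matrix[0])
    best = float('-inf')
    for top in range(rows):
        # column_sums[c] holds the sum of matrix[top..bottom][c].
        column_sums = [0] * cols
        for bottom in range(top, rows):
            for c in range(cols):
                column_sums[c] += matrix[bottom][c]
            best = max(best, kadane(column_sums))
    return best


print(max_subrectangle_sum([[1, -2], [3, 4]]))  # 7
```

For an n-by-n input there are quadratically many row pairs and each costs linear time, which matches the cubic bound stated above.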

Applications


Maximum subarray algorithms are used for data analysis in many fields, such as genomic sequence analysis, computer vision, and data mining.

Genomic sequence analysis employs maximum subarray algorithms to identify important biological segments of protein sequences and to extract information for predicting outcomes. As an example, specific information about a protein sequence can be organized into a linear function, which can then be used to understand the structure and function of the protein. This approach is regarded as an efficient and simple way to analyze such data.

The score-based technique of finding the segment with the highest total sum is used in many problems similar to the maximum subarray problem. In genomic sequence analysis, these problems include conserved segments, GC-rich regions, tandem repeats, low-complexity filter, DNA binding domains, and regions of high charge.[citation needed]

In computer vision, maximum subarray algorithms are used on bitmap images to detect the highest-scoring region, which represents the brightest area in an image. The image is a two-dimensional array of positive values, each corresponding to the brightness of a pixel. Before the algorithm is run, every value in the array is normalized by subtracting the mean brightness from it.

In data mining, maximum subarray algorithms are applied to numerical attributes. To understand the role of the maximum subarray problem in data mining, it helps to be familiar with association rules. An association rule is an if/then statement that relates seemingly unrelated pieces of data; its two parts are the antecedent (the "if" part) and the consequent (the "then" part). In business applications of data mining, association rules are important for examining and predicting customers' behavior. Companies use data mining to anticipate common trends by representing such problems as maximum subarray problems, in which an array is normalized and then solved. The highest-scoring subarray indicates what customers are responding well to, and can influence the companies' actions as a result.[citation needed]

Kadane's algorithm

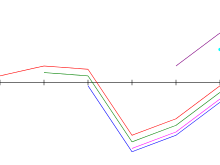

| Example run |

|---|

|

Kadane's algorithm scans the given array from left to right.

In the $j$th step, it computes the subarray with the largest sum ending at $j$; this sum is maintained in variable current_sum.[8]

Moreover, it computes the subarray with the largest sum anywhere in $A[1...j]$, maintained in variable best_sum,[9] and easily obtained as the maximum of all values of current_sum seen so far, cf. line 6 of the algorithm.

As a loop invariant, in the $j$th step, the old value of current_sum holds the maximum over all $i \in \{1, ..., j\}$ of the sum $A[i]+...+A[j-1]$.[10] Therefore, current_sum $+ A[j]$[11] is the maximum over all $i \in \{1, ..., j\}$ of the sum $A[i]+...+A[j]$. To extend the latter maximum to cover also the case $i=j+1$, it is sufficient to consider also the empty subarray $A[j+1 \; ... \; j]$. This is done in line 5 by assigning $\max(0, \text{current\_sum} + A[j])$ as the new value of current_sum, which after that holds the maximum over all $i \in \{1, ..., j+1\}$ of the sum $A[i]+...+A[j]$.

Thus, the problem can be solved with the following code,[1]: 74 [4]: 211 expressed here in Python:

```python
def max_subarray(numbers):
    best_sum = 0  # or: float('-inf')
    current_sum = 0
    for x in numbers:
        current_sum = max(0, current_sum + x)
        best_sum = max(best_sum, current_sum)
    return best_sum
```

This version of the algorithm will return 0 if the input contains no positive elements (including when the input is empty). For the variant of the problem which disallows empty subarrays, best_sum should be initialized to negative infinity instead[1]: 78,171 and also in the for loop current_sum should be updated as max(x, current_sum + x).[12] In that case, if the input contains no positive element, the returned value is that of the largest element (i.e., the least negative value), or negative infinity if the input was empty.
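Putting both modifications together, the variant that disallows empty subarrays can be sketched as follows. The function name `max_subarray_nonempty` is chosen here only to distinguish it from the version above.

```python
def max_subarray_nonempty(numbers):
    """Largest sum over non-empty contiguous subarrays of numbers."""
    best_sum = float('-inf')  # no subarray has been seen yet
    current_sum = 0
    for x in numbers:
        # Either start a new subarray at x or extend the previous one.
        current_sum = max(x, current_sum + x)
        best_sum = max(best_sum, current_sum)
    return best_sum


print(max_subarray_nonempty([-3, -1, -2]))  # -1
print(max_subarray_nonempty([]))            # -inf
```

On an input with at least one positive element, this variant returns the same value as the version that allows the empty subarray.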

The algorithm can be modified to keep track of the starting and ending indices of the maximum subarray as well:

```python
def max_subarray(numbers):
    best_sum = 0  # or: float('-inf')
    best_start = best_end = 0  # or: None
    current_sum = 0
    for current_end, x in enumerate(numbers):
        if current_sum <= 0:
            # Start a new sequence at the current element
            current_start = current_end
            current_sum = x
        else:
            # Extend the existing sequence with the current element
            current_sum += x

        if current_sum > best_sum:
            best_sum = current_sum
            best_start = current_start
            best_end = current_end + 1  # the +1 is to make 'best_end' exclusive

    return best_sum, best_start, best_end
```

In Python, arrays are indexed starting from 0, and the end index is typically excluded, so that the subarray [22, 33] in the array [-11, 22, 33, -44] would start at index 1 and end at index 3.
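The half-open index convention can be seen with ordinary list slicing. In this small illustration the start and end values are written out by hand rather than computed, and the variable names are chosen only for the example.

```python
numbers = [-11, 22, 33, -44]
best_start, best_end = 1, 3  # half-open interval: index 3 is excluded

segment = numbers[best_start:best_end]
print(segment)       # [22, 33]
print(sum(segment))  # 55
```

With an exclusive end index, the length of the subarray is simply the difference between the two indices.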

Because of the way this algorithm uses optimal substructures (the maximum subarray ending at each position is calculated in a simple way from a related but smaller and overlapping subproblem: the maximum subarray ending at the previous position), this algorithm can be viewed as a simple example of dynamic programming.
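The dynamic-programming view becomes explicit if the value for every position is stored in a table instead of a single variable. The sketch below assumes non-empty input and disallows the empty subarray; the names `max_subarray_dp_table` and `ending_here` are hypothetical.

```python
def max_subarray_dp_table(numbers):
    """Return (best sum, table of best sums ending at each position)."""
    if not numbers:
        raise ValueError("numbers must be non-empty")
    # ending_here[j] is the largest sum of a subarray that ends at index j.
    ending_here = [numbers[0]]
    for x in numbers[1:]:
        # Subproblem j is solved from subproblem j - 1 alone.
        ending_here.append(max(x, ending_here[-1] + x))
    return max(ending_here), ending_here


best, table = max_subarray_dp_table([-2, 1, -3, 4, -1, 2, 1, -5, 4])
print(table)  # [-2, 1, -2, 4, 3, 5, 6, 1, 5]
print(best)   # 6
```

Since each table entry depends only on its predecessor, the table can be replaced by one running variable, which is what the constant-space version does.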

The runtime complexity of Kadane's algorithm is $O(n)$.[1]: 74 [4]: 211

Generalizations

Similar problems may be posed for higher-dimensional arrays, but their solutions are more complicated; see, e.g., Takaoka (2002). Brodal & Jørgensen (2007) showed how to find the k largest subarray sums in a one-dimensional array, in the optimal time bound $O(n+k)$.

The maximum-sum k disjoint subarrays can also be computed in the optimal time bound $O(n+k)$.[13]
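To make the "k largest subarray sums" problem concrete, the following sketch enumerates every non-empty subarray sum and keeps the k largest. It is a quadratic-time illustration of the problem statement only, and is not the optimal algorithm of Brodal & Jørgensen (2007); the function name `k_largest_subarray_sums` is an assumption.

```python
import heapq


def k_largest_subarray_sums(numbers, k):
    """Return the k largest non-empty subarray sums, in descending order."""
    def all_sums():
        for i in range(len(numbers)):
            running = 0
            for j in range(i, len(numbers)):
                running += numbers[j]  # sum of numbers[i..j]
                yield running

    return heapq.nlargest(k, all_sums())


print(k_largest_subarray_sums([1, -2, 3], 3))  # [3, 2, 1]
```

With k set to 1, the single returned value is the ordinary maximum subarray sum for the variant that disallows the empty subarray.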


References

1. Jon Bentley (May 1989). Programming Pearls (2nd? ed.). Reading, MA: Addison Wesley. ISBN 0-201-10331-1.

2. Bentley, Jon (1984). "Programming Pearls: Algorithm Design Techniques". Communications of the ACM. 27 (9): 865–873. doi:10.1145/358234.381162.

3. by using a precomputed table of cumulative sums $S[k] = \sum_{x=1}^{k} A[x]$ to compute the subarray sum $\sum_{x=i}^{j} A[x] = S[j] - S[i-1]$ in constant time

4. David Gries (1982). "A Note on the Standard Strategy for Developing Loop Invariants and Loops" (PDF). Science of Computer Programming. 2: 207–241.

5. Richard S. Bird (1989). "Algebraic Identities for Program Calculation" (PDF). The Computer Journal. 32 (2): 122–126. doi:10.1093/comjnl/32.2.122. Here: Sect.8, p.126

6. Tamaki, Hisao; Tokuyama, Takeshi (1998). "Algorithms for the Maximum Subarray Problem Based on Matrix Multiplication". Proceedings of the 9th SODA (Symposium on Discrete Algorithms): 446–452. Retrieved November 17, 2018.

7. Backurs, Arturs; Dikkala, Nishanth; Tzamos, Christos (2016). "Tight Hardness Results for Maximum Weight Rectangles". Proc. 43rd International Colloquium on Automata, Languages, and Programming: 81:1–81:13. doi:10.4230/LIPIcs.ICALP.2016.81.

8. named MaxEndingHere in Bentley (1989), and c in Gries (1982)

9. named MaxSoFar in Bentley (1989), and s in Gries (1982)

10. This sum is $0$ when $i=j$, corresponding to the empty subarray $A[j \; ... \; j-1]$.

11. In the Python code, $A[j]$ is expressed as x, with the index $j$ left implicit.

12. While the latter modification is not mentioned by Bentley (1989), it achieves maintaining the modified loop invariant current_sum $= \max_{i \in \{1, ..., j\}} A[i]+...+A[j]$ at the beginning of the $j$th step.

13. Bengtsson, Fredrik; Chen, Jingsen (2007). "Computing maximum-scoring segments optimally" (PDF). Luleå University of Technology (3).

- Brodal, Gerth Stølting; Jørgensen, Allan Grønlund (2007), "A linear time algorithm for the k maximal sums problem", Mathematical Foundations of Computer Science 2007, Lecture Notes in Computer Science, vol. 4708, Springer-Verlag, pp. 442–453, doi:10.1007/978-3-540-74456-6_40.

- Takaoka, Tadao (2002), "Efficient algorithms for the maximum subarray problem by distance matrix multiplication", Electronic Notes in Theoretical Computer Science, 61: 191–200, doi:10.1016/S1571-0661(04)00313-5.

- Sung Eun Bae (2007). Sequential and Parallel Algorithms for the Generalized Maximum Subarray Problem (PDF) (Ph.D. thesis). University of Canterbury.

External links

- TAN, Lirong. "Maximum Contiguous Subarray Sum Problems" (PDF). [dead link]

- Mu, Shin-Cheng (2010). "The Maximum Segment Sum Problem: Its Origin, and a Derivation".

- "Notes on Maximum Subarray Problem". 2012.

- www.algorithmist.com

- alexeigor.wikidot.com

- greatest subsequential sum problem on Rosetta Code

- geeksforgeeks page on Kadane's Algorithm
